The Challenges

A top US retailer faced challenges with scalability, maintenance, performance, data recovery, disaster recovery, operational overhead, and governance while running its data on legacy data warehouses.

The client also needed to reduce application costs and processing time. To overcome these challenges, the client required a consolidated Modern Data Platform on Google Cloud Platform (GCP).

The Objective

To migrate the complex on-premises Teradata warehouse, two Hadoop clusters, and an existing clickstream solution on Azure to Google Cloud Platform.

To further leverage the performance of BigQuery (BQ) to deliver a solution that streamlines retail operational functions across the network.

Solutions

- Google recommended Datametica to the client as a migration partner to establish a consolidated Modern Data Platform on Google Cloud Platform.

- Using Eagle, our automated data migration planning and assessment product, we first analyzed the existing data warehouse and created a well-planned strategy with optimized data model recommendations. This helped set up a successful foundation for migration to GCP within weeks.

- We then used Raven, our automated workload conversion product, to translate the source workloads (ETL/ELT) to target workloads (ETL/ELT). This saved 70% of the time compared to traditional methods of data conversion and translation. Raven's automated translation provides a uniform, clean, and highly maintainable translated code base.

- Using Pelican, our automated data validation product, we ensured confidence in decommissioning the existing legacy warehouses. The overall reconciliation process was completed with greater efficiency and granular comparison, with zero data copy and no coding.

- One of the key challenges of this migration to GCP was the integration of applications hosted on the mainframe. The Datametica Mainframe parser was used heavily to integrate more than 1,000 mainframe feeds, including packed binary-coded decimal (BCD) files.

- End-to-end DevOps services were another key deliverable of this mammoth migration program. Datametica built systems and processes for environment provisioning and setup, the development/delivery pipeline, CI/CD-based deployment pipelines, and monitoring and alerting mechanisms.

- With our unique product suite and deep expertise in GCP, the task was accomplished 12 times faster than with traditional methods.
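
For readers unfamiliar with the packed binary-coded decimal fields mentioned in the mainframe bullet above, the following is a minimal sketch of how such a field can be decoded. It is not the Datametica Mainframe parser, whose internals are not described here. The function name `unpack_comp3` and the `scale` parameter are hypothetical, and the sketch assumes the common COBOL COMP-3 layout: two decimal digits per byte, with the final low nibble holding the sign (0xC or 0xF for positive, 0xD for negative).

```python
from decimal import Decimal


def unpack_comp3(raw: bytes, scale: int = 0) -> Decimal:
    """Decode one packed-decimal (COMP-3 style) field.

    Hypothetical helper for illustration only.
    raw   : the field's bytes as read from the mainframe feed
    scale : number of implied decimal places (taken from the copybook)
    """
    if not raw:
        raise ValueError("empty packed-decimal field")

    # Every byte except the last carries two digits (high and low nibble).
    digits = []
    for byte in raw[:-1]:
        digits.append(byte >> 4)
        digits.append(byte & 0x0F)

    # The last byte carries one digit and the sign nibble.
    digits.append(raw[-1] >> 4)
    sign_nibble = raw[-1] & 0x0F

    if any(d > 9 for d in digits):
        raise ValueError("invalid digit nibble in packed-decimal field")
    if sign_nibble not in (0x0C, 0x0D, 0x0F):
        # Assumption: only the preferred sign codes are accepted here.
        raise ValueError("unexpected sign nibble")

    value = Decimal(int("".join(str(d) for d in digits)))
    value = value.scaleb(-scale)  # apply the implied decimal point
    return -value if sign_nibble == 0x0D else value
```

For example, under these assumptions the three bytes `0x12 0x34 0x5C` with `scale=2` decode to 123.45, and the two bytes `0x12 0x3D` decode to -123.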

Datametica Accelerators Used

GCP Products We Used

The following GCP products helped Datametica in establishing a single environment for a systematic workflow:

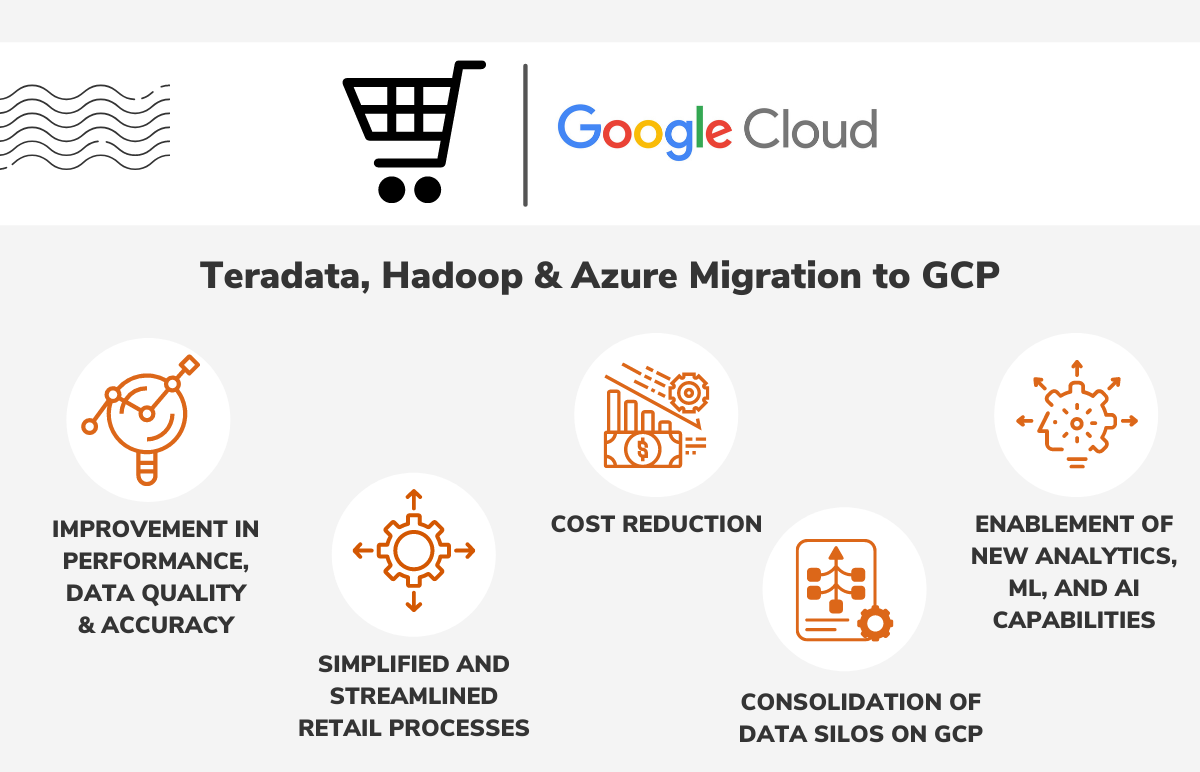

Client Benefits

A faster and more cost-effective Teradata migration to GCP, together with the Hadoop and Azure migrations, using the Datametica migration product suite.

Our solution resulted in:

- Improvement in overall performance

- Reduction in overall cost

- Consolidation of data silos on GCP

- Enablement of new analytics, ML, and AI capabilities

- Simplified and streamlined retail processes

- Access to the disaster recovery options that GCP provides

- Improvement in data quality and accuracy, making the data easier to access and analyze
