Is this a UI-based tool?

Yes, Pelican is a zero-coding web UI tool that makes interaction between the user and the software seamless.

Can Pelican be used as an enterprise tool in my organization?

- Sample data encryption (AES 256)

- User authentication

  - Customer managed – Using LDAP/AD integration (individual user & group)

  - Product managed – Using SMTP integration

- SSL compatibility

- Multiple deployment options

- Programmatic interfaces (APIs)

- Code migration utility

- Backup solutions and utilities to eliminate Pelican metadata loss

- Detailed logging framework

- Access and Role management module

- SDLC adoption and vulnerability testing using market leading scanning tools

Do I need to know coding to use Pelican?

Datametica’s Pelican is a state-of-the-art data validation technology that requires ZERO coding. Even a non-technical person can run the validations, understand the reports, and perform bug triage as per the tool’s recommendations.

What types of validation does Pelican perform?

Datametica’s Pelican performs various types of cell-level validation like Data, Metadata, and Scope.

- Data

  - Cell level comparison between two tables. Two modes available:

    - Litmus – Quick check

    - Full – Comprehensive check

  - Identification of all columns with mismatching cells in single execution

  - Samples of mismatches

  - Mismatch data reconciliation between two tables. Parameters:

    - Mismatched rows

    - Missing / Extra rows

    - Duplicate rows

- Metadata

  - Column positional ordering

  - Data type

- Scope

  - Full table

  - Incremental data
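The reconciliation parameters listed above (mismatched, missing/extra, and duplicate rows) can be illustrated with a minimal Python sketch. This is not Pelican's implementation: the `reconcile` function, the in-memory lists of dictionaries standing in for tables, and the `key` column are all assumptions made for illustration.

```python
from collections import Counter


def reconcile(source_rows, target_rows, key):
    """Classify differences between two tables given as lists of dicts.

    Hypothetical helper for illustration only; `key` names the column
    assumed to identify a row in both tables.
    """
    src_counts = Counter(row[key] for row in source_rows)
    tgt_counts = Counter(row[key] for row in target_rows)
    src = {row[key]: row for row in source_rows}
    tgt = {row[key]: row for row in target_rows}

    # Rows present on one side only.
    missing = sorted(k for k in src if k not in tgt)
    extra = sorted(k for k in tgt if k not in src)

    # Keys that occur more than once in either table.
    duplicates = sorted(
        {k for counts in (src_counts, tgt_counts)
         for k, n in counts.items() if n > 1}
    )

    # For keys present on both sides, list the columns whose cells differ.
    mismatched = {}
    for k in src.keys() & tgt.keys():
        cols = sorted(c for c in src[k] if src[k][c] != tgt[k].get(c))
        if cols:
            mismatched[k] = cols

    return {
        "missing": missing,
        "extra": extra,
        "duplicates": duplicates,
        "mismatched": mismatched,
    }


source = [{"id": 1, "amt": 10}, {"id": 2, "amt": 20}, {"id": 3, "amt": 30}]
target = [{"id": 1, "amt": 10}, {"id": 2, "amt": 25},
          {"id": 4, "amt": 40}, {"id": 4, "amt": 40}]
report = reconcile(source, target, key="id")
```

In this sketch, `report["mismatched"]` maps each row key to the columns that differ, which mirrors the idea of identifying all mismatching columns in a single pass.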

How do you make sure that Pelican is safe to get into my company's network?

Pelican product development follows an extensive SDLC process. This includes testing all the integration points to ensure the highest standards of security compliance, for example, vulnerability scans, metadata and data security, network security, and user security.

Pelican is an enterprise application deployed in the customer network for data validation. It is used only by the employees and partners who have secured access to the network. Pelican does not need access to any outside network. Pelican access is controlled by a ground delivery team, which is covered by an NDA with customers.

What are the infrastructural requirements if I want to use Pelican?

The recommended baseline infrastructure configuration required to run Pelican effortlessly is a Docker or GKE machine equivalent to a machine type of n1-standard-32 or higher.

Will Pelican impact my source and target data platforms while performing validation?

Pelican runs simple SQL queries on both Source and Target platforms. Usually the Pelican user is allocated a specific capacity and resources to avoid impacts to existing workloads.

How does Pelican handle different data types?

Pelican has a built-in algorithm that identifies data type differences between two platforms and uses options such as expressions and user inputs to make sure they are handled correctly for validation.
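As a rough illustration of why data type differences matter, the Python sketch below normalizes values from both sides to a common type before comparing them. It is not Pelican's algorithm: the function names `to_decimal`, `to_date`, and `values_match` are hypothetical, and the normalizer functions stand in for the kind of expression a user might supply.

```python
from datetime import date
from decimal import Decimal


def to_decimal(value):
    """Normalize numbers stored as strings, floats, or Decimals."""
    return Decimal(str(value))


def to_date(value):
    """Normalize dates stored as ISO strings or as date objects."""
    return value if isinstance(value, date) else date.fromisoformat(value)


def values_match(source_value, target_value, normalizer):
    """Compare two cells after applying the same normalizer to both."""
    return normalizer(source_value) == normalizer(target_value)


# The source stores the amount as text; the target stores it as a float.
amount_ok = values_match("10.50", 10.5, to_decimal)
# The source stores the date as text; the target stores a native date.
date_ok = values_match("2024-01-05", date(2024, 1, 5), to_date)
```

Without normalization, a naive comparison of `"10.50"` and `10.5` would report a mismatch even though both represent the same value.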

Can I interact programmatically with Pelican?

Yes, Pelican offers the ability to interact programmatically with the help of APIs. APIs can be used to configure, manage and process table validations within Pelican from the customer’s enterprise scheduler.

What are the operating costs of Pelican?

- Cost of the Pelican machine

- Select query costs on the DB platforms

What support is available if I buy a licensed product?

- Demo and best practices sessions

- Tool deployment assistance

- Licensing

- Upgrades

- Bugs and Enhancements support

- Critical and High Vulnerability Mitigation

- Dedicated SME support (based on licensing model)

- Tool support for issues like service outages, performance issues, unknown errors, etc.

How can Pelican ensure data security during a data validation check?

Pelican offers high data security during data validation because it doesn’t move the actual data on either the source or the target over the network for comparison. It uses hashing mechanisms that enable it to validate without moving data or creating copies of the existing data, i.e., zero data movement. This saves valuable storage space and network bandwidth, and reduces the risk of data leakage. Read our case study about a similar use case here.
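The general idea of hash-based comparison can be sketched in Python as follows. The source does not describe Pelican's actual hashing scheme, so everything here is an assumption for illustration: SHA-256 as the hash, the `row_digest`, `table_digests`, and `compare_digests` helpers, and in-memory lists standing in for the two platforms. The point is that only fixed-size digests, not the row contents, need to be compared.

```python
import hashlib


def row_digest(row, columns):
    """Hash a row's values in a fixed column order (illustrative scheme)."""
    canonical = "\x1f".join(
        "<NULL>" if row[c] is None else str(row[c]) for c in columns
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def table_digests(rows, key, columns):
    """Compute one digest per row, keyed by the row identifier."""
    return {row[key]: row_digest(row, columns) for row in rows}


def compare_digests(source_digests, target_digests):
    """Return keys whose digests differ; no row data is exchanged."""
    shared = source_digests.keys() & target_digests.keys()
    return sorted(k for k in shared if source_digests[k] != target_digests[k])


columns = ["id", "name", "amt"]
source = [{"id": 1, "name": "a", "amt": 10}, {"id": 2, "name": "b", "amt": 20}]
target = [{"id": 1, "name": "a", "amt": 10}, {"id": 2, "name": "b", "amt": 25}]

# In a real deployment each side would compute its digests where the
# data lives; here both are computed locally for demonstration.
differing = compare_digests(
    table_digests(source, "id", columns),
    table_digests(target, "id", columns),
)
```

Here `differing` contains only the key of the row whose `amt` value differs between the two sides.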

What source and target databases does Pelican support?

As mentioned on our Pelican product page, the following are the source and target pairs that are supported by Pelican:

| Source | Target |
|---|---|
| Teradata | BigQuery |
| Netezza | BigQuery |
| Hive | BigQuery |
| Oracle | BigQuery |
| BigQuery | BigQuery |
| DB2 | BigQuery |
| Oracle | Hive |
| Teradata | Hive |
| Netezza | Hive |
| Hive | Hive |
| BigQuery | Hive |
| Vertica | BigQuery |
| Teradata | Snowflake |
| Teradata | Synapse |
| Netezza | Snowflake |
| Netezza | Synapse |
| MS SQL Server | Hive |
| MS SQL Server | BigQuery |
| Greenplum | Redshift |
| Hive | Delta Lake |
| Hive | Synapse |
| Snowflake | BigQuery |
| Teradata | Delta Lake |
| Redshift | BigQuery |
| MS SQL Server | MS SQL Server |
| Oracle | PostgreSQL |

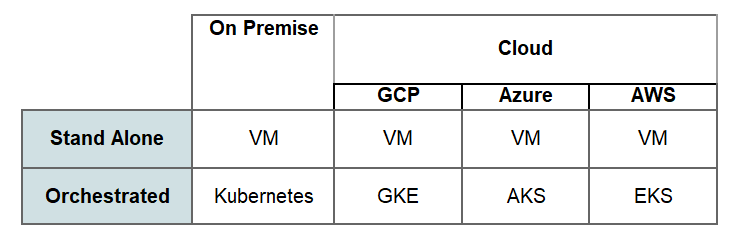

Where would Pelican be deployed: in the cloud or on-prem?

There are various Pelican deployment options based on the source and target data warehouses. Here is the list:

What is the frequency of standard software updates – options being Monthly, Quarterly, Half-Yearly, Annual?

- Functionality

- Security

- Performance

- Technical Debt

- Bug fixes

- Documentation

- Miscellaneous

About Datametica

Datametica is a global leader in data warehouse migration and modernization to the cloud. We empower businesses by migrating their data, workloads, ETL, and analytics to the cloud using automation. Through our automated products (Eagle – Data Warehouse Assessment & Migration Planning Product, Raven – Automated Workload Conversion Product, and Pelican – Automated Data Validation Tool), Datametica automates and accelerates data migration to the cloud, removing anxiety from the migration process and making it faster, more accurate, lower risk, and more cost-competitive.

We specialize in transforming legacy Teradata, Oracle, Hadoop, Netezza, Vertica, and Greenplum platforms, along with ETL tools such as Informatica, DataStage, Ab Initio, and others, to cloud-based data warehousing, with further capabilities in data engineering, advanced analytics solutions, data management, data lake implementation, and cloud optimization.