The Objective

One of the top retailers in the US wanted to validate its data during a data warehouse migration to GCP. The initial plan called for 25 BigQuery (BQ) engineers, which would have required an investment of over 3.4 million dollars.

The Challenges

The 25 BQ engineers would have validated the data manually. They would have tested only samples rather than the complete database, and they would not have been able to run multiple rounds of data validation.

Solutions

Datametica used Pelican to validate and reconcile the data during the migration to GCP. Pelican is a product that validates and reconciles petabyte-scale data at the cell level across heterogeneous systems, which automates, accelerates, and simplifies the data validation process.

Key features of Pelican:

- Automation: Automated data validation & reconciliation

- Zero Factor: No coding and no data movement required

- Accurate: Intelligent comparison at cell level

- Parallel run: Validation during migration

- Insights: Detailed reports identifying discrepancies
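
To make "comparison at cell level" concrete, here is a minimal sketch in Python. It is not Pelican's implementation, which this case study does not describe; the function name `compare_cells`, the sample tables, and the `id` key column are all hypothetical. It only shows the idea of checking every cell of every row instead of a sample, and reporting each discrepancy.

```python
from typing import Any

Row = dict[str, Any]


def compare_cells(source: list[Row], target: list[Row], key: str) -> list[tuple]:
    """Compare two small in-memory tables cell by cell (illustrative only).

    Returns a list of (key_value, column, source_value, target_value)
    tuples, one per mismatching cell or missing row.
    """
    # Index both tables by the key column so rows can be matched up.
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}

    diffs = []
    for k in sorted(src.keys() | tgt.keys()):
        s, t = src.get(k), tgt.get(k)
        if s is None or t is None:
            # The row exists on one side only.
            diffs.append((
                k,
                "<row>",
                "present" if s is not None else "missing",
                "present" if t is not None else "missing",
            ))
            continue
        # Both rows exist: check every column, not a sample of them.
        for col in sorted(s.keys() | t.keys()):
            if s.get(col) != t.get(col):
                diffs.append((k, col, s.get(col), t.get(col)))
    return diffs


# Hypothetical sample data: a legacy table and its migrated copy.
legacy = [
    {"id": 1, "sku": "A", "price": 10.0},
    {"id": 2, "sku": "B", "price": 5.5},
    {"id": 3, "sku": "C", "price": 7.0},
]
cloud = [
    {"id": 1, "sku": "A", "price": 10.0},
    {"id": 2, "sku": "B", "price": 5.75},
    {"id": 4, "sku": "D", "price": 1.0},
]

diffs = compare_cells(legacy, cloud, key="id")
for d in diffs:
    print(d)
```

In this toy example the report lists one changed price and two rows that exist on only one side. A sample-based check could miss any of these, which is the gap that full cell-level validation is meant to close.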

Benefits

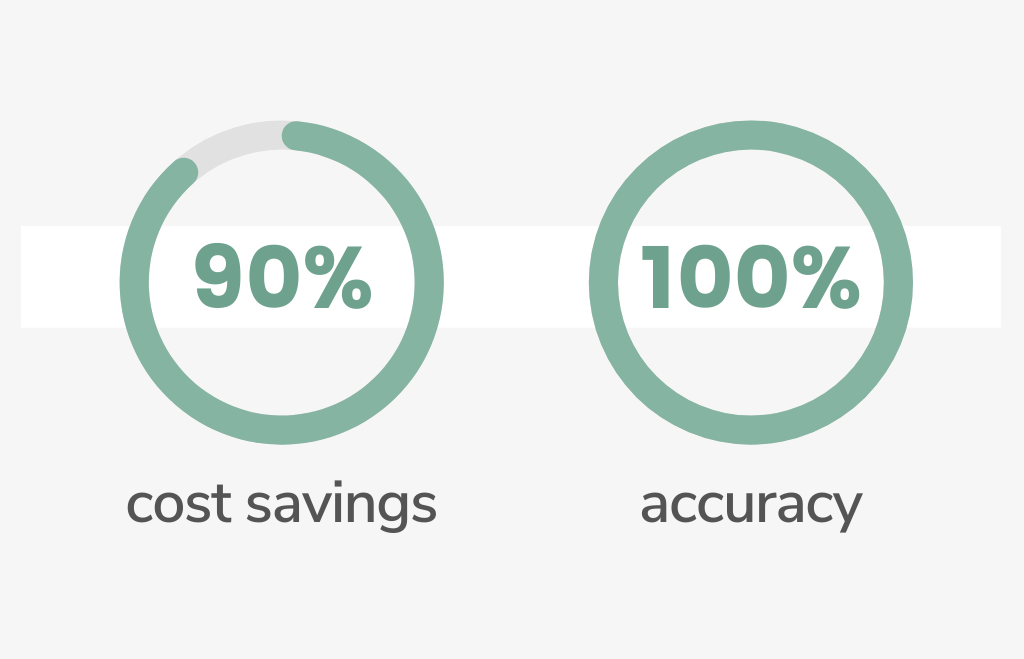

By choosing Pelican, the client cut the cost of data validation by 90% compared with the manual approach. Replacing the 25 BigQuery engineers with Pelican saved millions of dollars.

The decision to use Pelican also brought the additional benefit of validating the whole dataset down to the cell level whenever needed, instead of the traditional approach of sampling and one-time validation. All this ensured 100% accuracy in the data validation process and gave the client confidence in decommissioning the legacy system.
