Data is the lifeline of the modern business world. The exponential rate at which data is generated, recorded, and processed every year has left our planet saturated with scattered information. This downpour of data can be tamed with a Modern Data Platform approach and advanced technologies such as machine learning, business intelligence, and artificial intelligence, which turn data analysis into a basis for strategic decision making. The need for data that is easily accessible, scalable, and flexible, anywhere and anytime, can be addressed by building a unified data repository, or Data Lake, across the enterprise and making it available for analysis by everyone in the organization. A Data Lake built on these newer technologies establishes a Modern Data Platform that resolves the scalability problems of handling this exponential data growth, which would otherwise come at an unacceptably high cost.

However, building a Data Lake that is efficient and meaningful for the business is one of the most common challenges that organizations face. There have been numerous instances of failure to set up an efficient Data Lake. According to Gartner, through 2018, 80% of Data Lakes will not include effective metadata management capabilities, which will make them inefficient.

How Datametica builds an effective Data Lake on a Modern Data Platform:

Know legacy system data access patterns:

Focusing on the future-state data model, that is, the way data will be stored, is a key foundational practice. However, to excel in this fast-paced, data-driven business world, it is important for customers to set up an enterprise data lake that is user-friendly and inherently analytics-enabled. To provide a user-friendly platform, it is important to understand the data access patterns, which can inform the future-state data model. When done manually, this is a challenging and cumbersome process, and it is susceptible to major failures when upgrading the data platform. Drawing on its technical expertise and investments, Datametica has developed an intelligent technology called Eagle – Data Migration Planning Tool, which provides automated discovery of data access patterns across data stores and recommends the future-state data model and platform. Eagle further helps in tuning and optimizing the platform, data and access patterns.
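To make the idea of discovering data access patterns concrete, here is a minimal sketch that counts how often each table is read or written in a query log. It is only an illustration and not a description of how Eagle works. The `QUERY_LOG` contents, the table names and the `table_access_counts` helper are all assumptions made for this example; a real legacy warehouse exposes its query history in a vendor-specific format.

```python
import re
from collections import Counter

# Hypothetical query log. In practice this would come from the legacy
# warehouse's query history, whose format varies by vendor.
QUERY_LOG = [
    "SELECT * FROM sales.orders o JOIN sales.customers c ON o.cust_id = c.id",
    "SELECT count(*) FROM sales.orders WHERE order_date > '2018-01-01'",
    "INSERT INTO staging.events SELECT * FROM raw.events",
]

# Naive pattern: a table name is whatever follows FROM, JOIN or INTO.
# A production tool would use a real SQL parser; this regex misses
# quoted identifiers and comma-separated table lists.
TABLE_REF = re.compile(r"\b(FROM|JOIN|INTO)\s+([A-Za-z_][\w.]*)", re.IGNORECASE)


def table_access_counts(queries):
    """Count reads and writes per table across a list of SQL strings."""
    reads, writes = Counter(), Counter()
    for sql in queries:
        for keyword, table in TABLE_REF.findall(sql):
            # INTO marks a write target; FROM and JOIN mark reads.
            target = writes if keyword.upper() == "INTO" else reads
            target[table.lower()] += 1
    return reads, writes


if __name__ == "__main__":
    reads, writes = table_access_counts(QUERY_LOG)
    print("most read tables:", reads.most_common(3))
    print("written tables:", dict(writes))
```

Tables that are read often and joined together are candidates for co-location or denormalization in the future-state model, while rarely accessed tables may be candidates for cheaper storage tiers.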

Which modern data platform to choose:

The modernization of legacy data systems into a Data Lake must address the limitations of the legacy system: expense, rigidity, scalability and responsiveness.

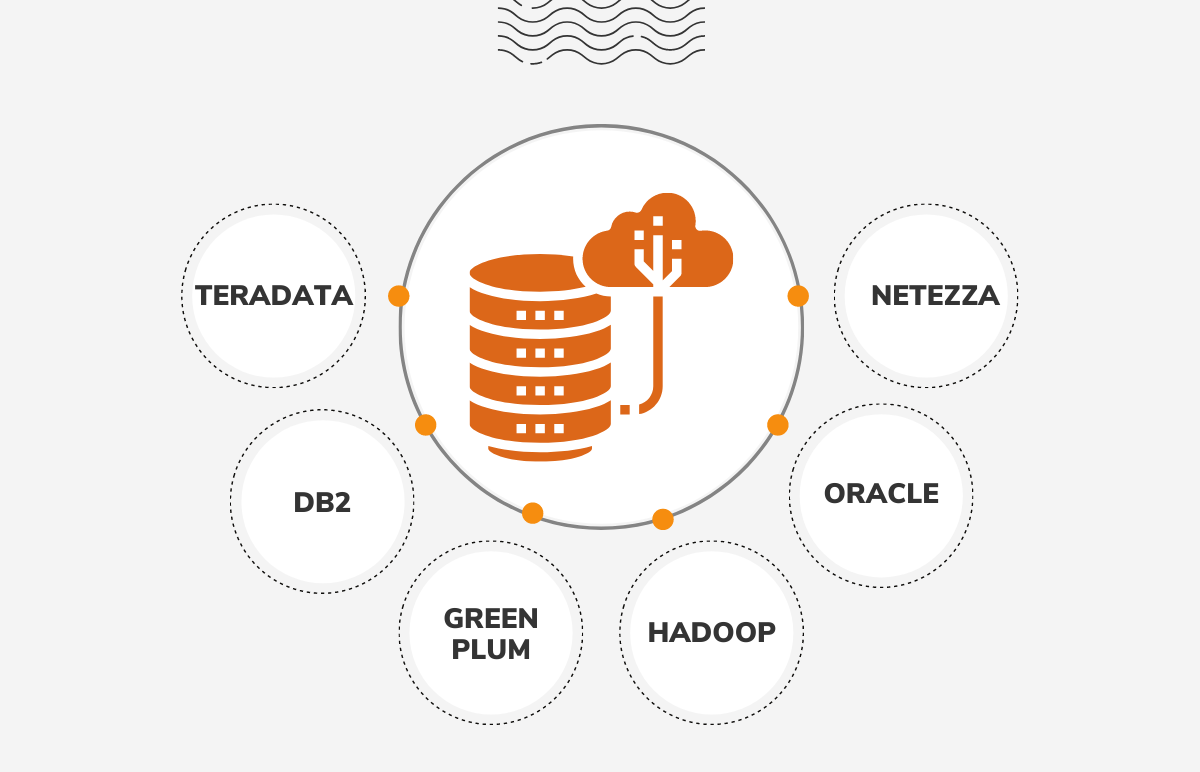

Datametica recommends a future-state platform such as Google Cloud Platform, Azure Data Lake, AWS data lake and others, which offer capabilities such as serverless architecture, managed services, data locality, decoupling of storage and compute, cost transparency, a pay-per-use model and a rich library for analytics.

Strong data lake foundation set up:

It is important to set up a solid foundation for the Data Lake on the cloud, with defined protocols, processes, and tools as a baseline. Once the data lake architecture and the optimized data model are prepared, several aspects need to be decided, such as determining the tools and technologies to be used, ensuring the data security of the platform, deciding the computational processes, setting up the serving layer and much more. In a nutshell, the data lake foundation needs to be set up upfront, before any use case is started.

Data ingestion on the data lake:

To ensure optimized data transfer into the data lake, a data ingestion framework can be implemented to integrate with various source systems such as databases, CDC (change data capture) feeds and log data. The data integration is done in the ingestion layer, which is built to handle various data patterns such as batch, micro-batch, and real-time ingestion.

Datametica has devised a framework to onboard data from various sources, such as databases, file systems and data streams, to the data lake. It brings a higher degree of automation to data onboarding, with zero manual work.
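The batch, micro-batch and real-time patterns mentioned above differ mainly in how many records are grouped before each write. The following is a minimal sketch of that idea, independent of any particular product or framework. The `micro_batches` and `ingest` helpers and the `sink` callable are hypothetical names introduced for this example.

```python
from itertools import islice


def micro_batches(records, batch_size):
    """Yield lists of up to batch_size records from any iterable."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def ingest(records, sink, batch_size=1):
    """Write records to sink in batches and return the number of batches.

    batch_size=1 approximates record-at-a-time (real-time) ingestion,
    a small batch_size gives micro-batches, and a batch_size at least as
    large as the input behaves like a single batch load.
    """
    count = 0
    for batch in micro_batches(records, batch_size):
        sink(batch)  # hypothetical writer, e.g. an append to lake storage
        count += 1
    return count


if __name__ == "__main__":
    landed = []
    batches = ingest(range(5), landed.append, batch_size=2)
    print(batches, landed)
```

Because the source is treated as a plain iterable, the same function can sit behind a database cursor, a file reader or a stream consumer; only the `sink` and the batch size change.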

Workload modernization:

Once the data is onboarded, the next step an enterprise should focus on is modernizing the workloads running on the new data lake. While onboarding a workload, code conversion may be required when the code on the existing platform is not compatible with the future-state platform. This code conversion is done efficiently using an automated code converter created by Datametica called Raven – Automated Workload Conversion Tool.

Validation:

Profile the data to detect anomalies. Understanding the taxonomy of the data is important for achieving optimized data sets. Continuous data validation and direct workload comparison between the existing environment and the Data Lake ensure that the curated data is properly migrated to the data lake. Datametica provides an automated tool called Pelican – Automated Data Validation Tool, which can perform effective data validation without any physical data movement.
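One general way to validate a migration without moving the data itself is to compute a compact fingerprint on each side and compare only the fingerprints. The sketch below illustrates that technique under simple assumptions; it is not a description of how Pelican works. The `table_fingerprint` and `tables_match` helpers are hypothetical, and rows are assumed to arrive as tuples of simple values that render identically on both platforms.

```python
import hashlib

MODULUS = 1 << 256  # keeps the running checksum at a fixed size


def table_fingerprint(rows):
    """Return (row_count, checksum) for an iterable of row tuples.

    Per-row SHA-256 digests are summed modulo 2**256, so the result does
    not depend on row order and duplicate rows do not cancel out.
    """
    count = 0
    checksum = 0
    for row in rows:
        # Join column values with a separator unlikely to occur in data.
        encoded = "\x1f".join(repr(value) for value in row).encode("utf-8")
        digest = hashlib.sha256(encoded).digest()
        checksum = (checksum + int.from_bytes(digest, "big")) % MODULUS
        count += 1
    return count, checksum


def tables_match(source_rows, target_rows):
    """Compare two tables by fingerprint only."""
    return table_fingerprint(source_rows) == table_fingerprint(target_rows)


if __name__ == "__main__":
    legacy = [(1, "a"), (2, "b"), (3, None)]
    lake = [(3, None), (1, "a"), (2, "b")]  # same rows, different order
    print(tables_match(legacy, lake))
```

In a real migration each fingerprint would be computed inside its own platform, so that only two small values cross the network. Type and formatting differences between platforms (for example, decimals or timestamps) would need to be normalized before hashing.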

Security:

Keep track of data and access everywhere! When data is the foundation of your existence in the business world, you know the importance of keeping it safe. Data from multiple sources brings new vulnerabilities. While offloading legacy data into the Data Lake, emphasis should be placed on keeping it secure in order to protect private and sensitive information.

Datametica protects business-critical data through data encryption (both at rest and in transit), data masking, data ingestion security, access control, authentication and authorization, access node security, firewall installation and more.
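As an illustration of one of the measures listed above, data masking, the following minimal sketch replaces sensitive fields with keyed pseudonyms before a record lands in the lake. It is a generic example, not Datametica's implementation. The `mask_value` and `mask_record` helpers, the field names and the demo key are assumptions; in practice the key would come from a secrets manager.

```python
import hashlib
import hmac


def mask_value(value, secret_key):
    """Deterministically pseudonymize a string with HMAC-SHA256.

    The same input and key always give the same token, so masked columns
    can still be joined on, but the original value is not recoverable
    without the key.
    """
    mac = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return mac.hexdigest()[:16]  # truncated for readability


def mask_record(record, sensitive_fields, secret_key):
    """Return a copy of record with the named fields masked."""
    masked = {}
    for key, value in record.items():
        if key in sensitive_fields and value is not None:
            masked[key] = mask_value(str(value), secret_key)
        else:
            masked[key] = value
    return masked


if __name__ == "__main__":
    demo_key = b"demo-key"  # assumption: load from a secrets manager instead
    row = {"id": 7, "email": "a@example.com", "phone": None}
    print(mask_record(row, {"email", "phone"}, demo_key))
```

A keyed hash is used rather than a plain hash so that someone who knows the set of likely inputs cannot rebuild the mapping without the key.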

Data governance:

Datametica can help implement sturdy Data Governance through a product called eCat, which establishes efficient metadata management, data quality, and end-to-end data audit and lineage. This mechanism ensures that data is consistently defined across the enterprise by filling major gaps and supplying missing functionality. It is a collaborative, automated, intelligent platform that helps users navigate data at various levels in an organization.

By following the practices above, an efficient Data Lake on a Modern Data Platform can be implemented, which will help optimize the operations of an enterprise. We at Datametica help you set up a Modern Data Platform and Data Lake that can help you save cost, scale your capacity, use your data for advanced analytics across the platform and modernize your business. With our unique toolsets and accelerators, we provide an end-to-end solution, from assessment to ongoing maintenance, for migrating optimized data, workloads, and use cases. By ensuring effective deployment, reconciliation, and operation, the Data Lake implementation that we perform adds value for the enterprise.

Either follow the traditional way with legacy systems and compromise on your business capabilities, or build an efficient enterprise Data Lake on the cloud through us and get ready to be at the forefront of the contemporary business world.


About Datametica

A Global Leader in Data Warehouse Modernization & Migration. We empower businesses by migrating their Data/Workload/ETL/Analytics to the Cloud by leveraging Automation.