The Objective:

A major US healthcare insurer wanted to migrate from its existing Teradata enterprise data warehouse (EDW) to Google Cloud Platform (GCP) to take advantage of cloud-native technologies, lower operational costs, and improve risk mitigation. The objective of this engagement was to migrate the Teradata workloads to BigQuery for storage, processing, machine learning, and analytics.

Challenges: How to Build an Efficient & Scalable Data Management, Reporting and Analytics System on GCP

The client wanted to organize and store its ever-growing data in a secure, scalable, and cost-effective manner to meet business needs. Another objective was to make the data available to all of its users (numbering in the thousands) for reporting, and to build a more modern and efficient advanced analytics and machine learning (ML) capability.

The Solution: GCP Foundation Setup with Teradata EDW Migration to Google BigQuery

- Datametica set up a cloud platform and delivered cloud migration services on Google Cloud Platform

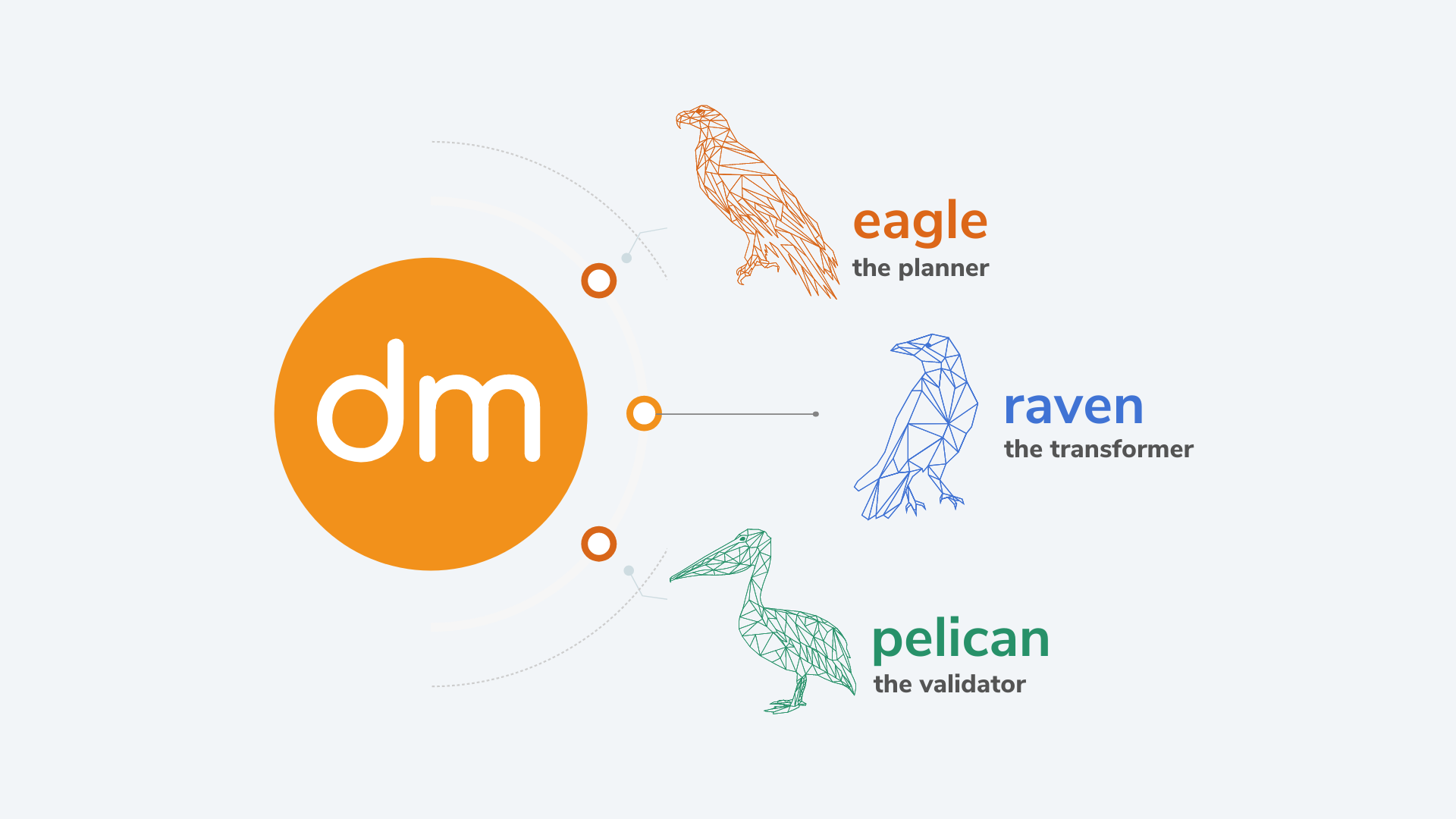

- For the initial assessment of the Teradata environment, Datametica used Eagle, its automated assessment and data migration planning tool, to size the data transfer and understand the datasets and table structures. This helped identify the key datasets, tables, and data pipelines to prioritize during migration.

- Set up the initial GCP foundations based on the client's business requirements

- Migrated historical data from on-premises Teradata to GCP

- Built batch pipelines to load data into BigQuery as part of a sidecar approach, enabling early onboarding of users

- Set up orchestration and scheduling using Cloud Composer

- Converted BTEQ scripts, stored procedures, database objects (such as views and functions), and Teradata table DDL to their BigQuery (BQ) equivalents using Raven, Datametica's automated code (SQL, script) and ETL conversion tool

- Converted DataStage jobs using Raven

- Validated data using Pelican, Datametica's automated data validation tool, to confirm that the historical data and the incremental loads produced by the converted code matched between Teradata and BigQuery at the table, row, and cell level during production parallel runs

- Supported the client during user acceptance testing (UAT)
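The Eagle assessment above was used to size the data transfer. Eagle's internals are not described in this case study, but the basic arithmetic behind a transfer-time estimate can be sketched in Python. This is a minimal sketch that assumes the network link is the bottleneck, uses decimal units (1 GB = 8 Gb), and applies a hypothetical 80% link utilization; it ignores compression, parallelism limits, and retries. The function names and table sizes are hypothetical.

```python
def transfer_hours(size_gb: float, bandwidth_gbps: float, utilization: float = 0.8) -> float:
    """Estimate hours to copy size_gb gigabytes over a bandwidth_gbps link.

    Assumes decimal units (1 GB = 8 Gb) and that only `utilization` of the
    nominal link speed is achievable in practice (hypothetical default 0.8).
    """
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth_gbps must be positive")
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    effective_gbps = bandwidth_gbps * utilization
    seconds = size_gb * 8 / effective_gbps  # gigabits / (gigabits per second)
    return seconds / 3600


def sizing_report(
    table_sizes_gb: dict[str, float], bandwidth_gbps: float, utilization: float = 0.8
) -> tuple[dict[str, float], float]:
    """Return per-table transfer hours and the total for all tables."""
    per_table = {
        name: transfer_hours(size, bandwidth_gbps, utilization)
        for name, size in table_sizes_gb.items()
    }
    total = transfer_hours(sum(table_sizes_gb.values()), bandwidth_gbps, utilization)
    return per_table, total


if __name__ == "__main__":
    # Hypothetical table sizes in GB; not figures from this engagement.
    sizes = {"claims": 60_000, "members": 25_000, "providers": 15_000}
    per_table, total = sizing_report(sizes, bandwidth_gbps=10)
    for name, hours in per_table.items():
        print(f"{name}: {hours:.1f} h")
    print(f"total: {total:.1f} h")
```

With these hypothetical inputs, 100,000 GB over a 10 Gbps link at 80% utilization is 800,000 Gb at 8 Gbps, or 100,000 seconds (roughly 27.8 hours). An estimate like this helps decide whether a historical load can run as a single network copy or should be staged in waves.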

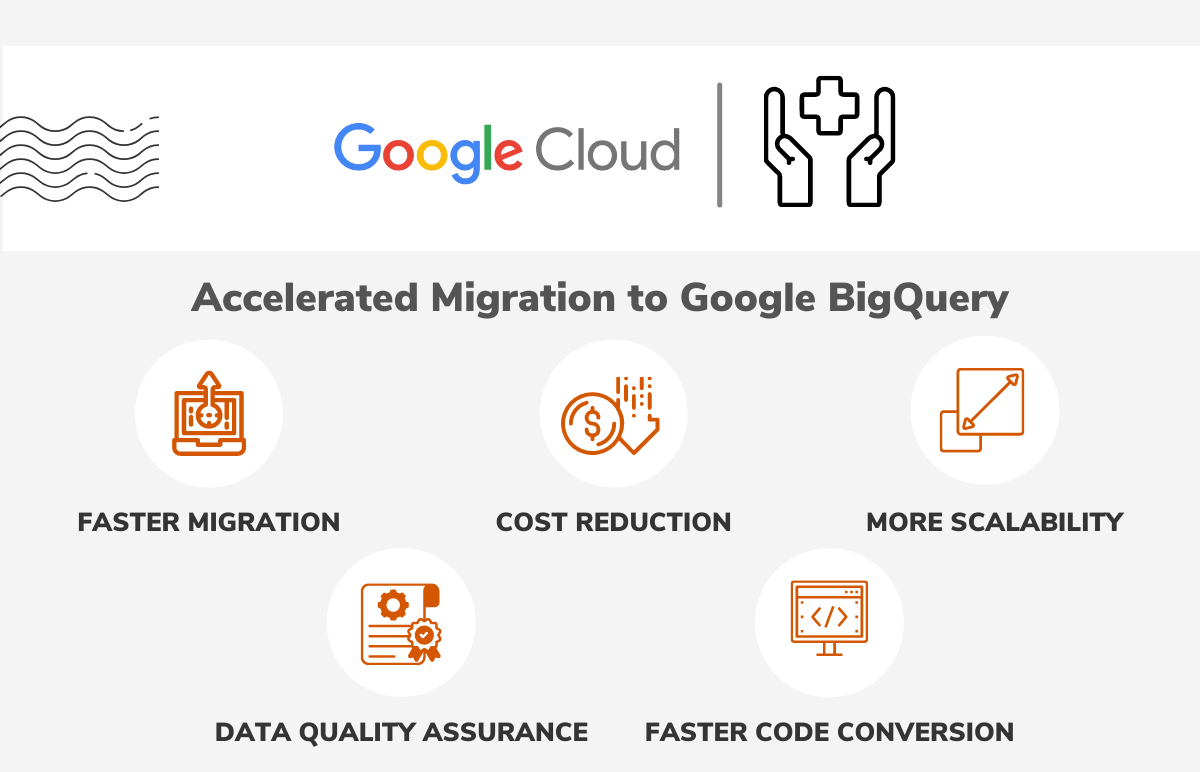
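Raven's conversion rules are not shown in this case study. To illustrate one small piece of DDL conversion, the sketch below maps common Teradata column types to BigQuery types and assembles a CREATE TABLE statement. It is a partial, hypothetical mapping: it accepts only a bare type with optional length or precision, maps DECIMAL to NUMERIC when the value fits NUMERIC's 29 integer and 9 fractional digits (otherwise BIGNUMERIC), and treats a zone-less Teradata TIMESTAMP as DATETIME, which is one reasonable choice among several. Unrecognized types (for example INTERVAL, PERIOD, or character-set clauses) raise an error instead of being guessed. The table and column names are hypothetical.

```python
import re

# Partial, illustrative mapping of Teradata base types to BigQuery types.
# Not Raven's rule set; extend or adjust for a real migration.
_TYPE_MAP = {
    "BYTEINT": "INT64",
    "SMALLINT": "INT64",
    "INTEGER": "INT64",
    "INT": "INT64",
    "BIGINT": "INT64",
    "FLOAT": "FLOAT64",
    "REAL": "FLOAT64",
    "DOUBLE PRECISION": "FLOAT64",
    "CHAR": "STRING",
    "CHARACTER": "STRING",
    "VARCHAR": "STRING",
    "CLOB": "STRING",
    "BYTE": "BYTES",
    "VARBYTE": "BYTES",
    "BLOB": "BYTES",
    "DATE": "DATE",
    "TIME": "TIME",
    # Assumption: zone-less TIMESTAMP is treated as civil time (DATETIME).
    "TIMESTAMP": "DATETIME",
    "TIMESTAMP WITH TIME ZONE": "TIMESTAMP",
}

_DECIMAL_RE = re.compile(r"^(?:DECIMAL|NUMERIC)\s*(?:\(\s*(\d+)\s*(?:,\s*(\d+)\s*)?\))?$")


def to_bigquery_type(td_type: str) -> str:
    """Map a single Teradata column type to a BigQuery type name."""
    t = " ".join(td_type.upper().split())  # normalize case and whitespace
    m = _DECIMAL_RE.match(t)
    if m:
        # Teradata defaults DECIMAL to precision 5, scale 0 when omitted.
        precision = int(m.group(1) or 5)
        scale = int(m.group(2) or 0)
        # NUMERIC holds up to 29 integer digits and 9 fractional digits.
        if scale <= 9 and precision - scale <= 29:
            return "NUMERIC"
        return "BIGNUMERIC"
    base = re.sub(r"\s*\([^)]*\)", "", t)  # drop length/precision, e.g. VARCHAR(100)
    if base not in _TYPE_MAP:
        raise ValueError(f"unsupported Teradata type: {td_type!r}")
    return _TYPE_MAP[base]


def create_table_ddl(table: str, columns: list[tuple[str, str]]) -> str:
    """Build a BigQuery CREATE TABLE statement from (name, Teradata type) pairs."""
    body = ",\n".join(f"  {name} {to_bigquery_type(td)}" for name, td in columns)
    return f"CREATE TABLE {table} (\n{body}\n);"


if __name__ == "__main__":
    # Hypothetical table and columns for illustration only.
    print(create_table_ddl(
        "edw.claims",
        [("claim_id", "BIGINT"), ("member_id", "INTEGER"),
         ("paid_amount", "DECIMAL(18,2)"), ("service_ts", "TIMESTAMP(6)")],
    ))
```

Failing loudly on unknown types is a deliberate choice in this sketch: a silent fallback to STRING would compile, but it could hide precision or semantic changes that data validation would only catch later.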

Benefits: Accelerated and Cost-Effective Migration to Google BigQuery

- Faster migration with the help of Datametica’s automated data migration tools

- Reduced the cost of the cloud optimization process

- Greater scalability and modernized data management: data scientists, analysts, and client users are no longer constrained by system performance

- Rapid assessment using Eagle to plan the movement of workloads, users, and data to GCP

- Faster conversion of Teradata (TD) SQL and stored procedures (SPs) into native BigQuery SQL and stored procedures via Raven

- Ensured 100% data accuracy between Teradata and GCP with Pelican, keeping the two platforms accurately synchronized throughout the migration

- Pelican provided the data quality assurance needed to decommission the legacy system
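The table-, row-, and cell-level checks credited to Pelican can be illustrated with a small in-memory comparison. This is not Pelican's implementation; it is a sketch that assumes both extracts fit in memory, share a unique key column, and have already been normalized to comparable Python values (for example, decimals and timestamps rendered the same way on both sides). The function names and sample rows are hypothetical.

```python
import hashlib
from typing import Any

Row = dict[str, Any]


def row_digest(row: Row, columns: list[str]) -> str:
    """Hash a row's values in a fixed column order (repr keeps None and 'None' distinct)."""
    payload = "\x1f".join(repr(row[c]) for c in columns)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def validate(source: list[Row], target: list[Row], key: str, columns: list[str]) -> dict[str, Any]:
    """Compare two extracts at table, row, and cell level."""
    src = {r[key]: r for r in source}
    tgt = {r[key]: r for r in target}
    report: dict[str, Any] = {
        # Table level: total row counts must agree.
        "row_count_match": len(source) == len(target),
        # Row level: every key must exist on both sides.
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "cell_mismatches": [],
    }
    for k in sorted(src.keys() & tgt.keys()):
        # Row level: identical digests mean no cell-by-cell check is needed.
        if row_digest(src[k], columns) == row_digest(tgt[k], columns):
            continue
        # Cell level: record each differing column value.
        for c in columns:
            if src[k][c] != tgt[k][c]:
                report["cell_mismatches"].append((k, c, src[k][c], tgt[k][c]))
    report["passed"] = (
        report["row_count_match"]
        and not report["missing_in_target"]
        and not report["extra_in_target"]
        and not report["cell_mismatches"]
    )
    return report


if __name__ == "__main__":
    # Hypothetical rows standing in for Teradata and BigQuery extracts.
    teradata_rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.5}]
    bigquery_rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.0}]
    print(validate(teradata_rows, bigquery_rows, "id", ["amount"]))
```

Keeping the three levels separate in the report makes a failure easy to localize: a count mismatch, a missing or extra key, or a specific column in a specific row. A production tool would more likely compute counts and row hashes inside each database and pull back only the mismatching keys, rather than loading full extracts into memory as this sketch does.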

Datametica Products Used

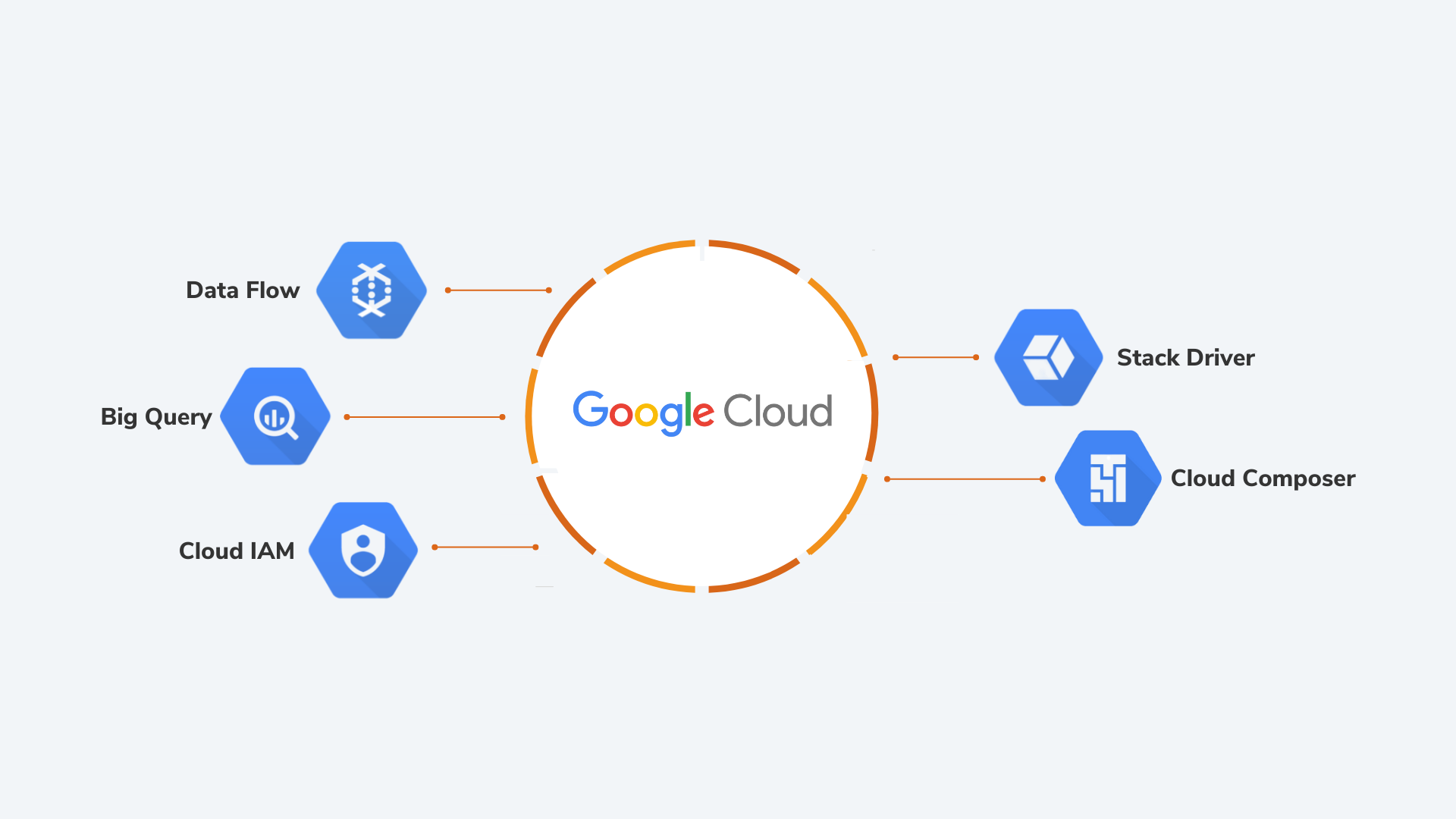

GCP Products Used