Objective: Retire the Legacy Data Warehouse by Moving to GCP to Enhance Operational Performance

Datametica’s customer, a manufacturer and retailer of products across multiple categories, sought to modernize its legacy Teradata data warehouse. To achieve this, the customer planned to use Google Cloud Platform (GCP), specifically BigQuery, Cloud Composer, and Dataflow.

The aim of this initiative was to:

- Migrate the legacy on-premises Teradata data warehouse to Google Cloud Platform.

- Convert all Teradata workloads (DDLs, UDFs, BTEQ scripts, etc.) to BigQuery on Google Cloud Platform.

- Convert the existing Informatica ETL workflows and mappings to GCP-native technologies.

- Boost operational performance and achieve scalability.

Challenges: Performance and Time-Related Issues

The client experienced poor performance when running workloads on on-premises Teradata and Informatica ETL. To address this, improve cost management and analytical reporting, and build real-time use cases, the client decided to adopt GCP-native technologies.

Solutions: Design GCP Future State Architecture

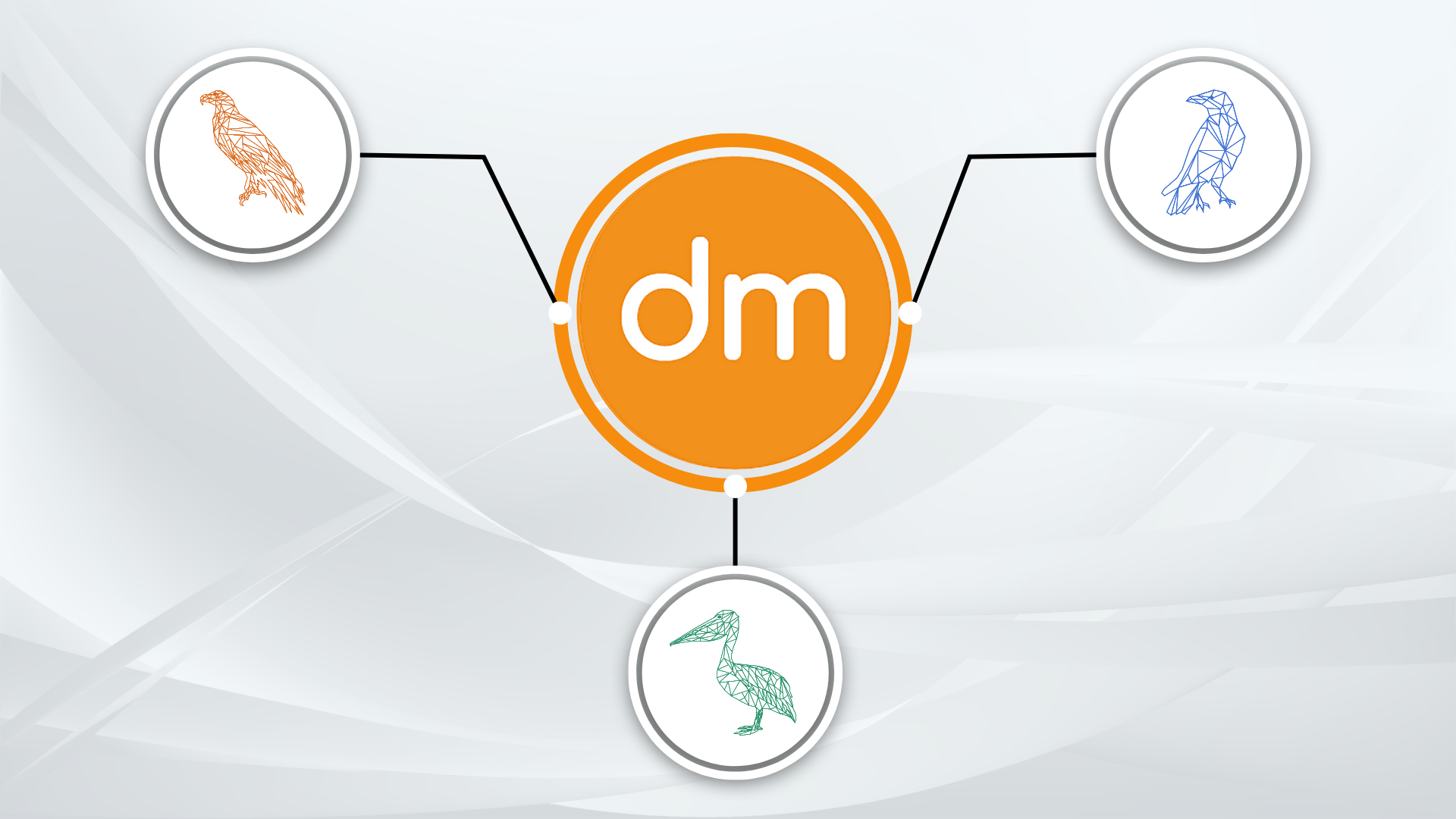

- Datametica used its Eagle product to assess the Teradata and Informatica ETL estate, uncovering unknown complexities, tracing end-to-end data lineage, and tracking data dependencies. The assessment informed a sprint-based migration plan and a roadmap to GCP.

- To efficiently meet the client’s long-term GCP objectives, we built a GCP foundation that adhered to GCP best practices and recommendations while also addressing security and auditing requirements.

- The GCP foundation setup included Sandbox, Dev, Pre-Prod, and Prod environments; firewall and IAM rules; and Private Service Connect (a private entry point through which all Google API requests are routed).

- In this modernization exercise, we redesigned the data model and architecture, rewrote the code, and adopted GCP-native technologies, retiring existing tools such as Informatica and Control-M. This approach led to significant improvements in overall performance and to cost savings for our client.

- Raven was used to convert 100% of the Teradata tables, view DDLs, UDFs, macros, stored procedures, Informatica workflows, Informatica code, BTEQ scripts, and shell scripts to GCP-native equivalents.

- Raven automated the conversion of Informatica mappings and workflows to BQ SQL and DAGs.

- Transformation and lookup logic was converted to BQ, and data ingestion pipelines were refactored for GCP.

- Dataflow was used for data ingestion, and all the orchestration (Control-M) and scheduling jobs were converted to Cloud Composer.

- The reporting tool, MicroStrategy (MSTR), was repointed from Teradata (TD) to BQ. This involved repointing the schema from TD to BQ and converting pass-through functions and legacy filters to BQ-equivalent SQL.

- The logical tables were changed to meet the requirements of the MSTR reports, and numerous reports and cubes were validated.

- Pelican was used for 100% automated validation of migrated BQ tables and views against the Teradata source.

- Pelican features such as Parallel Run and cell-level validation contributed to faster, more secure, and more accurate validation.
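To make the idea of cell-level validation concrete, here is a minimal sketch in plain Python. It is not Pelican's implementation, which this case study does not describe. The function name `cell_level_diff`, the sample rows, and the column names are all hypothetical and chosen only for illustration. The sketch assumes both tables fit in memory and share a unique key column.

```python
from typing import Any

Row = dict[str, Any]


def cell_level_diff(
    source_rows: list[Row], target_rows: list[Row], key: str
) -> list[tuple[Any, str, Any, Any]]:
    """Compare two tables cell by cell.

    Returns one (key, column, source_value, target_value) tuple per
    mismatching cell. A row present on only one side is reported once
    with the pseudo-column "<row>" and two presence flags.
    """
    source_by_key = {row[key]: row for row in source_rows}
    target_by_key = {row[key]: row for row in target_rows}

    mismatches = []
    for k in sorted(source_by_key.keys() | target_by_key.keys()):
        src = source_by_key.get(k)
        tgt = target_by_key.get(k)
        if src is None or tgt is None:
            # Row missing on one side: record which side has it.
            mismatches.append((k, "<row>", src is not None, tgt is not None))
            continue
        for column in sorted(src.keys() | tgt.keys()):
            if src.get(column) != tgt.get(column):
                mismatches.append((k, column, src.get(column), tgt.get(column)))
    return mismatches


# Hypothetical sample data standing in for a Teradata source table
# and its migrated BQ counterpart.
teradata_rows = [
    {"order_id": 1, "amount": 10.0, "status": "SHIPPED"},
    {"order_id": 2, "amount": 25.5, "status": "OPEN"},
    {"order_id": 3, "amount": 7.0, "status": "OPEN"},
]
bigquery_rows = [
    {"order_id": 1, "amount": 10.0, "status": "SHIPPED"},
    {"order_id": 2, "amount": 25.0, "status": "OPEN"},
]

mismatches = cell_level_diff(teradata_rows, bigquery_rows, key="order_id")
for mismatch in mismatches:
    print(mismatch)
```

With this sample data the sketch reports one differing cell (the `amount` for order 2) and one row missing from the target (order 3). A production tool would typically push the comparison down to the databases, for example by comparing hashes, instead of pulling every row into memory.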

Client Benefits: Faster Cloud Migration, Optimized Performance and Time, and Unlocked Future Capabilities

- Significant improvement in operational performance compared with the legacy Teradata platform, achieved through automated GCP migration (a cube that took 46 minutes to refresh on Teradata took only 4 minutes on BQ).

- 45% faster migration to Google Cloud Platform.

- Datametica’s automated products reduced the migration cost by almost 40% and lowered the associated business risks.

- Repointing the reporting tool to GCP resulted in a visible improvement in report execution time.

- Regression testing achieved 100% accurate cell-value validation for both historical and incremental data.
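For readers who want the cube refresh figures above expressed as a ratio, the short calculation below works through the arithmetic. Only the 46-minute and 4-minute values come from the case study. The helper name `refresh_speedup` is hypothetical, and the result describes that single cube and not the workload as a whole.

```python
def refresh_speedup(before_minutes: float, after_minutes: float) -> tuple[float, float]:
    """Return (speedup factor, percentage reduction in refresh time)."""
    speedup = before_minutes / after_minutes
    reduction_pct = (before_minutes - after_minutes) / before_minutes * 100
    return speedup, reduction_pct


# 46 minutes on Teradata versus 4 minutes on BQ, as reported above.
speedup, reduction_pct = refresh_speedup(46, 4)
print(f"{speedup:.1f}x faster, {reduction_pct:.1f}% less refresh time")
# prints "11.5x faster, 91.3% less refresh time"
```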

DM Product Used

GCP Product Used